The evolution of Generative AI and Large Language Models (LLMs) into Agentic AI has undoubtedly boosted productivity across various industries. However, this advancement also introduces a new set of risks for many enterprises, including unauthorized MCP servers, an increase in Non-Human Identities (NHIs), and shared credentials that enable agents to impersonate humans.

These systems are following the trend of “consumerization of IT,” which offers a quick, easy, and relatively inexpensive way to set up and connect agents to tools and data without involving formal IT processes. Employees often expense these tools rather than going through the formal finance purchasing process.

A critical distinction often overlooked in the rush toward agent adoption is the difference between consumer and enterprise expectations around data use. Consumer AI tools are typically built on a model in which user interactions may be leveraged–directly or indirectly–to improve the vendor’s shared models. In contrast, enterprises require strict guarantees that their data will not be used for model training, will remain isolated from other tenants, and will be handled in accordance with regulatory, contractual, and security commitments. When employees adopt consumer-grade AI services to “get things done,” they may unknowingly introduce the risk of sensitive internal data entering training pipelines that fall far outside enterprise controls.

The public visibility and marketability of AI at the moment present significant challenges for IT and security departments. While it may seem tempting to ignore these issues or cover your ears, the fundamental principles still apply:

- Robust Inventory: Ensure you have a comprehensive understanding of all your servers, the software running on them, and the end-user devices.

- Robust Identity: Implement tools and processes to identify humans, non-humans, and associated roles and access permissions. Whenever possible, replace passwords with cryptographically secure authentication.

- Robust Access: Limit access to systems that are necessary for both humans and AI agents.
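As a rough illustration of how the identity and access principles fit together, here is a minimal Python sketch. The `Identity` class, the `can_access` helper, and the sample entries are all hypothetical names invented for this example; a real deployment would rely on your identity provider and policy engine rather than hand-rolled code.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Identity:
    name: str
    kind: str  # "human" or "non-human" (e.g., an AI agent or service account)
    allowed_systems: frozenset = field(default_factory=frozenset)


def can_access(identity: Identity, system: str) -> bool:
    """Default deny: access is granted only to explicitly listed systems."""
    return system in identity.allowed_systems


# Hypothetical inventory entries, for illustration only.
alice = Identity("alice", "human", frozenset({"crm", "wiki"}))
report_agent = Identity("report-agent", "non-human", frozenset({"crm"}))

print(can_access(alice, "wiki"))         # alice is explicitly allowed
print(can_access(report_agent, "wiki"))  # the agent is not, so it is denied
```

The point of the sketch is that humans and agents go through the same check, and anything not explicitly granted is refused.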

To mitigate these risks, IT and security departments should invest in areas such as identity management, Zero Trust Network Access, microsegmentation, and inventory management. With Procella’s groundbreaking approach to cybersecurity assessments, we can help you quantify, prioritize, and justify investments that make the most of your existing systems, tools, and people.

Dashlane’s recently released 2025 survey report on anonymized passkey usage among its customers reveals significant momentum in passkey adoption and highlights a fundamental change in the authentication landscape: passkeys are disrupting traditional login methods.

Key Findings on Passkey Adoption

The data clearly indicates a surge in passkey use across various sectors:

- Remarkable Growth: Roblox experienced an 862% increase in passkey usage.

- Enterprise Adoption: Services like HubSpot and Okta are enabling and seeing rapid adoption of passkeys.

- Default Authentication: Retail giants, such as Amazon, saw huge adoption after making passkeys the default authentication method for their mobile users.

- User Penetration: A notable 40% of Dashlane users now have at least one passkey stored.

The Passkey Advantage: Security and User Experience

Passkeys are gaining traction because they offer a rare combination of benefits: they are more secure than passwords and more robust than existing Multi-Factor Authentication (MFA) technologies. They enhance security while reducing user friction.

As Alyssa Robinson, CISO of HubSpot, noted:

“We have also seen a 25% improvement in login success rates over passwords, as well as a 4x faster time to login compared to passwords and 2FA.”

Procella’s Stance on Passwordless Authentication

Procella firmly supports the move to passwordless authentication, especially passkeys.

- If you utilize Single Sign-On (SSO) providers like Google or Okta, implementing passkeys can be a smooth transition that improves overall user experience.

Planning for Passkey Deployment

While many of the benefits are clear, adopting passkeys in an enterprise environment requires careful planning, particularly regarding passkey storage.

- Operating System (OS) and browser vendors—such as Apple, Google, and Microsoft—offer their own built-in passkey stores.

- However, enterprises often need to avoid vendor lock-in to a single OS or browser. This is where password managers with advanced enterprise features, like Dashlane and 1Password, can provide platform-agnostic solutions.

Procella maintains a strict vendor-neutral approach, preferring to integrate with your existing technology stack rather than recommend a disruptive “forklift” replacement. That said, we are proud to be a 1Password Managed Services Provider for customers who choose 1Password as their Enterprise Password Manager.

Contact us to learn how Procella can help you plan your transition to a more secure, passwordless future.

This post on Mastodon by SwiftOnSecurity applies to all “social logins” and federated authentication, including SSO (single sign-on). For enterprises, it’s a reminder that all security decisions are risk-balancing exercises. We at Procella are unabashed advocates for SSO, but that means choosing the SSO provider and platform carefully and pairing it with both an identity management system and a log management system. Ultimately, a reputable SSO provider is likely to be a safer choice than social logins due to its focus, and significantly better than individual users managing their own identities across many diverse applications.

Sophocles said, “No enemy is worse than bad advice.”

If we assume that Sophocles was referring to actually acting on bad advice, it is easy to see how his sage words can be applied to Information Security some 2400 years after his death. In the first of a series on dealing with bad advice from an InfoSec perspective, Procella will examine an area that has a long history of misinformation: Password Hygiene.

Before examining an example of poor guidance one of Procella’s principals recently encountered personally, it’s important to note that passwords are often the elephant in the room. In most cases, Procella strongly advocates for moving away from traditional passwords in favor of passkeys or other FIDO2 authenticators.

With that PSA out of the way, on to the example:

For security purposes, passwords expire after 90 days. The data in our website is called Protected Health Information, or PHI; HIPAA (Health Insurance & Portability Act of 1996) requires the highest level of protection for this type of data.

The healthcare organization in question acknowledges the importance of protecting ePHI data, which is commendable. However, does expiring passwords every 90 days truly offer “the highest level of protection,” or is this practice just an example of the illusory truth effect–a phenomenon where repeated exposure to false information makes it seem true? Unfortunately, disinformation can persist even after it’s debunked because our brains tend to equate familiarity with truth. This seems to be the case with how many organizations handle password lifecycles. Despite numerous recommendations—and even mandates—from respected agencies and experts, backed by ample empirical evidence, many organizations still hold on to the belief that passwords should be rotated on a fixed schedule.

Back in 2015, one of the organizations that later became the UK’s National Cyber Security Centre (NCSC) published a list of recommendations titled “Password Guidance: Simplifying Your Approach.” That document included seven tips that remain relevant today, with some adjustments made for the rise of remote workers and cloud-based services.

The seven tips:

- Tip 1: Change all default passwords

- Tip 2: Help users cope with password overload

- Tip 3: Understand the limitations of user-generated passwords

- Tip 4: Understand the limitations of machine-generated passwords

- Tip 5: Prioritise administrator and remote user accounts

- Tip 6: Use account lockout and protective monitoring

- Tip 7: Don’t store passwords as plain text

Let’s dig a little deeper into each of these tips:

Tip 1 is pretty self-explanatory but just in case: default passwords should be changed immediately if there is any chance that a device will ever be accessed by anyone other than the intended user(s). Most obviously, this applies to anything that may be connected to a public or private network (including PCs, printers, IoT devices, etc.), as those passwords are known to every botnet and scanner in existence. However, this also applies to airgapped devices that may contain any kind of sensitive information or that could be used to collect it. Kiosks, libraries, that old electronic diary that’s in a box somewhere. The electronic recipe book with Oma’s secret recipe in it? Basically anything that can be perused, stolen, shared or otherwise accessed by anyone but a legitimate user of the system should have its default password changed and securely recorded. Which brings us to tip 2:

Tip 2 recommends using password managers (with appropriate caution, as they are valuable targets) and discarding the outdated practice of changing passwords on a fixed schedule.

Most administrators will force users to change their password at regular intervals, typically every 30, 60 or 90 days. This imposes burdens on the user (who is likely to choose new passwords that are only minor variations of the old) and carries no real benefits as stolen passwords are generally exploited immediately. Long-term illicit use of compromised passwords is better combated by:

- monitoring logins to detect unusual use

- notifying users with details of attempted logins, successful or unsuccessful; they should report any for which they were not responsible

Regular password changing harms rather than improves security, so avoid placing this burden on users. However, users must change their passwords on indication or suspicion of compromise.

Tip 3 addresses the problems with human-generated passwords and advises against enforcing complexity rules, which often lead to predictable substitutions. Instead, the focus should be on blocking common passwords and educating users on how to create strong ones. Procella also recommends increasing the minimum password length and removing any maximum length restrictions for systems that support it, as long as those systems don’t truncate passwords.

Tip 4 suggests making machine-generated passwords memorable, though Procella generally advises against this. While machine-generated passwords offer significant security advantages, they can be hard to remember, placing unnecessary burden on users (see Tip 2). Procella instead recommends memorizing only a few key passwords, such as those for unlocking your computer or accessing your password vault, while securely storing the rest in the vault without attempting to memorize them.

Tip 5 advises against using administrator accounts for day-to-day operations, which remains best-practice advice. However, it limits the recommendation for additional authentication (such as tokens) to remote workers. Procella, on the other hand, recommends Multi-factor Authentication (MFA) or Passkeys for all human-operated accounts, not just those used by “remote workers.” With cloud services, every worker is effectively remote, regardless of their physical location.

Tip 6 suggests throttling failed login attempts rather than locking accounts, to improve user experience and prevent easy denial-of-service attacks by malicious actors. Additionally, there are now more effective ways to minimize brute-force attacks without disrupting users or business processes.
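To make the difference between throttling and lockout concrete, here is a minimal Python sketch of exponential backoff on failed logins. The `LoginThrottle` class, its method names, and the delay values are assumptions made for illustration; they are not taken from the NCSC guidance or from any particular product.

```python
class LoginThrottle:
    """Delay repeated failed logins instead of locking the account."""

    def __init__(self, base_delay: float = 1.0, max_delay: float = 300.0):
        self.base_delay = base_delay  # assumed starting delay, in seconds
        self.max_delay = max_delay    # assumed cap, so users are never locked out
        self._failures = {}           # account name -> consecutive failed attempts

    def required_delay(self, account: str) -> float:
        """Seconds to wait before the next attempt for this account is accepted."""
        failures = self._failures.get(account, 0)
        if failures == 0:
            return 0.0
        # Double the delay with each consecutive failure, up to the cap.
        return min(self.base_delay * 2 ** (failures - 1), self.max_delay)

    def record_failure(self, account: str) -> None:
        self._failures[account] = self._failures.get(account, 0) + 1

    def record_success(self, account: str) -> None:
        # A successful login clears the failure history.
        self._failures.pop(account, None)
```

Because the delay is capped and resets on success, a legitimate user who mistypes a password is slowed down briefly, while an attacker guessing at scale is slowed down a lot and cannot lock the real user out indefinitely.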

Tip 7 is completely self-explanatory and could easily be considered a subpoint of Tip 1.
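For readers who want to see what “not plain text” can look like in practice, here is a minimal Python sketch using salted PBKDF2 from the standard library. The choice of PBKDF2, the iteration count, and the function names are our assumptions for illustration; the NCSC tip does not prescribe a specific algorithm, and a dedicated password hashing library may be a better fit for production.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; tune for your own hardware


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest); store both, never the password itself."""
    salt = os.urandom(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

The per-password salt means two users with the same password end up with different stored values, and the slow derivation makes offline guessing more expensive if the database is ever stolen.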

As illustrated by the NCSC list, the shift away from scheduled password rotations is not new. In fact, this advice is nearly 10 years old—a significant period in the evolution of information security—but it remains surprisingly relevant today!

The latest guidance from NCSC now has 6 tips:

Many of the tips are repeated, including Procella’s favorite: reducing reliance on passwords altogether.

Some strong guidance from the UK but how about the US?

In 2017, NIST published password guidance in Special Publication 800-63B, section 5.1.1 Memorized secrets. The language is verbose but had similar guidance to NCSC at the time. In August of 2024, NIST also published a draft update to 800-63B that simplified some of the language from the previous publication and strengthened a number of “SHOULD NOT” recommendations to “SHALL NOT” requirements, most notably related to password rotations and composition rules (e.g., requiring mixtures of different character types). In other words, to comply with NIST standards (as emphasized by Procella), users must not be required to change passwords periodically.

The updated requirements include:

3.1.1.2 Password Verifiers

The following requirements apply to passwords:

- Verifiers and CSPs SHALL require passwords to be a minimum of eight characters in length and SHOULD require passwords to be a minimum of 15 characters in length.

- Verifiers and CSPs SHOULD permit a maximum password length of at least 64 characters.

- Verifiers and CSPs SHOULD accept all printing ASCII [RFC20] characters and the space character in passwords.

- Verifiers and CSPs SHOULD accept Unicode [ISO/ISC 10646] characters in passwords. Each Unicode code point SHALL be counted as a single character when evaluating password length.

- Verifiers and CSPs SHALL NOT impose other composition rules (e.g., requiring mixtures of different character types) for passwords.

- Verifiers and CSPs SHALL NOT require users to change passwords periodically. However, verifiers SHALL force a change if there is evidence of compromise of the authenticator.

- Verifiers and CSPs SHALL NOT permit the subscriber to store a hint that is accessible to an unauthenticated claimant.

- Verifiers and CSPs SHALL NOT prompt subscribers to use knowledge-based authentication (KBA) (e.g., “What was the name of your first pet?”) or security questions when choosing passwords.

- Verifiers SHALL verify the entire submitted password (i.e., not truncate it).
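The length-related requirements above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `check_password` function and its messages are hypothetical, and the 64-character cap is one permitted choice (the draft says verifiers SHOULD permit at least 64). It does not cover the hint, KBA, or forced-change requirements.

```python
MIN_LENGTH = 8   # SHALL: minimum of eight characters
MAX_LENGTH = 64  # SHOULD permit at least 64; this sketch assumes exactly 64 as its cap


def check_password(password: str) -> list[str]:
    """Return a list of problems; an empty list means the password is acceptable."""
    problems = []
    # len() on a Python str counts Unicode code points, so each code point
    # is counted as a single character.
    length = len(password)
    if length < MIN_LENGTH:
        problems.append(f"must be at least {MIN_LENGTH} characters")
    if length > MAX_LENGTH:
        problems.append(f"must be at most {MAX_LENGTH} characters")
    # Deliberately no composition rules (upper/lower/digit/symbol) and no
    # truncation: the whole submitted password is evaluated as-is.
    return problems
```

Note what is absent: there is no check for character classes and no expiry date. Under this guidance, a long all-lowercase passphrase passes, while a short password fails regardless of how many symbols it contains.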

Many other organizations, including Microsoft and the US FTC, have adopted similar policies and/or made similar recommendations while quoting numerous studies and other empirical evidence. Passkeys and other technologies that either replace or supplement traditional passwords continue to gain traction. However, many companies still require their customers and users to follow outdated and unsafe practices for managing their credentials. If only Sophocles were alive to offer his guidance.

In closing, in case you were wondering, the site that was the impetus for this post does have its own set of password requirements:

Minimum 8 characters

Must not contain username in any part of it

Cannot be the same as any of the last 10 passwords used

At least 3 of the 4 following conditions must be present:

One upper case

One lower case

One number

One special character (~!@#$%^&*_-+=`|(){}[]:;.?/)

Unfortunately, in addition to several of its requirements not being NIST-compliant, the site also does not offer MFA or any security stronger than a password, relying instead on the implicit trust typically given to healthcare providers to justify its questionable policies. This underscores the importance of considering the source when evaluating advice.

Should the healthcare organization in question update their password policy? Probably, since they are claiming compliance where it doesn’t exist. Should you rush to change your password policy? Perhaps, but it’s likely you’ll need to adjust other policies as well, and prioritizing this within your already busy cybersecurity program can be challenging. Procella provides assessments and expert guidance to help you develop a strategic roadmap for enhancing your security posture.

If you use YubiKeys, you have probably heard about the recent side-channel vulnerability discovered by NinjaLab. But what does the vulnerability really entail, what is the real-world impact, and what—if anything—should organizations and individuals do to safeguard themselves?

Let’s start with the bad news (spoiler alert: it’s not as bad as it sounds at first glance). This vulnerability is present in all YubiKeys purchased before May 2024, and it allows an attacker to potentially make a copy of your YubiKey.

Sounds alarming, right? So what’s the good news, and why all the fuss?

The good news is that you or your organization likely aren’t at much more risk than before the vulnerability was disclosed. That’s not to say there is no risk or that there aren’t steps you can take to better safeguard your environment (more on that later), but it does mean that this vulnerability does not pose an existential threat to YubiKeys or physical authenticators in general.

In fact, Yubico has assigned a Moderate severity rating to the vulnerability, and it has a relatively low CVSS score of 4.9. In Yubico’s own words:

A sophisticated attacker could use this vulnerability to recover ECDSA private keys. An attacker requires physical possession and the ability to observe the vulnerable operation with specialized equipment to perform this attack. In order to observe the vulnerable operation, the attacker may also require additional knowledge such as account name, account password, device PIN, or YubiHSM authentication key.

(While Yubico and others have mentioned that additional knowledge and/or credentials may be required to “observe the vulnerable operation,” Procella feels it’s safe to assume that anyone capable of executing this attack is also likely capable of obtaining the necessary additional knowledge.)

With these details in mind, it’s easier to understand why this vulnerability isn’t as concerning as it may have initially seemed. Not only must the attacker physically take possession of the target YubiKey (while also having access to the expensive equipment required to exploit the vulnerability), but they must also physically open the device, risking visible damage that could raise red flags. All of this would need to happen without the device’s owner realizing their key was taken and returned.

As mentioned earlier, while the barrier to entry for this exploit is high, it doesn’t mean there is zero risk. For example, if you are targeted by a well-resourced threat actor (such as a nation-state), you could be at risk and should take extra precautions. However, if you or your organization is a likely target for such sophisticated attacks, you are probably already implementing additional security measures.

Because the attack requires physical access to your key and the ability to return it without your knowledge, the usual “economies of scale” don’t apply here. Unlike other attacks where attackers can use hardware to repeatedly crack passwords for widespread credential-spraying attacks, this vulnerability must be targeted at specific individuals. Additionally, the attacker must be physically present, which significantly increases their risk of getting caught.

So, what should you do about this vulnerability? First off, don’t panic. Second, continue using your YubiKey. It still provides significant protection against phishing and compromised passwords, and switching to another solution would be unwise at this point. Other MFA solutions offer similar protection against compromised passwords, but they lack the phishing resistance offered by FIDO-based technologies like YubiKeys.

If you’re part of an Enterprise IT or Security team, consider increasing the frequency of requiring users to re-authenticate with their YubiKeys. This reduces the window of time a cloned key could be used, minimizing the risks. It’s also important to educate users on how and why they should promptly report and revoke lost, stolen, or damaged YubiKeys.
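As a simple illustration of the re-authentication idea, here is a minimal Python sketch of a session age check. The `needs_reauth` function and the eight-hour window are hypothetical; in practice this setting usually lives in your identity provider’s session policy rather than in application code.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def needs_reauth(last_auth: datetime, max_age: timedelta,
                 now: Optional[datetime] = None) -> bool:
    """True when the last YubiKey authentication is older than max_age."""
    now = now or datetime.now(timezone.utc)
    return now - last_auth > max_age


# Hypothetical policy: require a fresh YubiKey touch every 8 hours.
MAX_AGE = timedelta(hours=8)
last_touch = datetime(2024, 9, 10, 9, 0, tzinfo=timezone.utc)
print(needs_reauth(last_touch, MAX_AGE, now=datetime(2024, 9, 10, 18, 0, tzinfo=timezone.utc)))
```

A shorter `max_age` narrows the window in which a cloned key is useful after the original is revoked, at the cost of prompting users more often.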

You might also consider planning to implement and eventually switch to Passkeys, which are FIDO2 credentials stored in software rather than hardware. Microsoft refers to hardware FIDO2 tokens as “device-bound passkeys” and software-based ones as “synced passkeys,” although the industry now refers to them simply as Passkeys. The key limitations of Passkeys, based on Procella’s experience, are expected to be resolved soon:

- Microsoft currently only supports “device-bound” passkeys, which are hardware-based. However, Microsoft has added support for Microsoft Authenticator to function as a device-bound passkey. Microsoft plans to support synced passkeys, which are software-based, in 2024 for both consumer accounts and Entra ID.

- Once Passkeys are created and saved in a password manager, they cannot be transferred to another password manager. However, the FIDO Alliance is working on enabling this functionality. For example, a user might create a Passkey in Chrome, but their company may want all Passkeys to be stored in 1Password. This limitation is expected to be addressed in the future.

Key takeaways:

- A side-channel vulnerability exists in YubiKeys purchased before May 2024, which could allow an attacker to clone a key, but the likelihood is low due to the complexity of the attack.

- The real-world risk is pretty low. If you don’t lose your YubiKey or leave it unattended, it can’t be cloned. Even if you were to lose a key or leave it unattended for an extended period, the risk can be mitigated by immediately removing the key as a valid authenticator.

- Don’t panic and don’t stop using your YubiKey. It still provides significant protection against phishing and compromised passwords.

- Consider a plan to switch to Passkeys, which are FIDO2 credentials stored in software rather than hardware.

If you’ve read this and are not yet using YubiKeys or Passkeys, now is a great time to get started. However, implementing them may not be the best first step for your organization. Procella offers a variety of assessments and expert guidance to help you develop a strategic roadmap for improving your security posture.

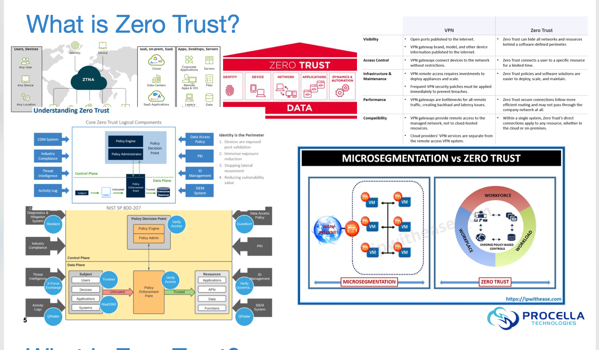

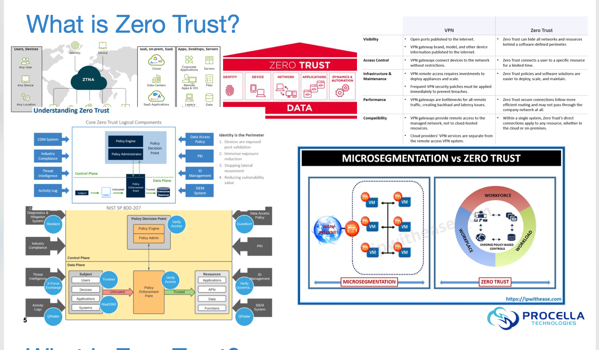

We were recently asked what our definition of Zero Trust is. This should be on our website, so it is now at /zerotrust and as this blog post.

The term Zero Trust was originally coined by John Kindervag, then with Forrester, way back in 2009, but it has since come to mean many things to many people.

Procella looks at Zero Trust from many different lenses. It is a strategy but it’s also an architecture and even a philosophy. At its core, Zero Trust means not trusting any entity, at any time, from any place. There is no inside and no outside, the concept of a perimeter no longer exists. People, devices, and networks should constantly be reevaluated to ensure least privilege access to only the systems and data required to complete a job or task. A Zero Trust mindset leverages identity, access, micro segmentation and continuous authentication to contain inevitable breaches and to allow security teams to enable businesses while securing users, systems and data.

Zero Trust is also a journey, not an endgame. Businesses and technologies evolve and a company’s Zero Trust strategy must be nimble enough to continuously evolve without constantly requiring new goals or shifting priorities. This strategy should be an organizational guiding principle that all future technology investments are measured against and must adapt to, rather than an afterthought or an obstacle that new projects must be shoehorned into just to satisfy a mission statement or a set of MBOs.

Spending the morning at Akamai refreshing on Guardicore. Best part is meeting customers and learning their segmentation desires and goals.

The “SSO tax” is particularly onerous for small businesses that do not need a full enterprise offering (and the associated costs), which is why Procella gravitates towards SSO-friendly vendors and partners wherever and whenever possible.

Single sign-on (SSO) is a method of authentication that allows users to access multiple applications with a single set of login credentials. These credentials are entered into a “centralized” login server (“identity provider” or “IDP”). Applications (“service providers” or “SP”) then refer to the IDP to obtain authentication and/or authorization tokens on behalf of the user. When we talk about SSO using a single set of credentials, this federated model is what we are referring to, not using the same credentials everywhere or using pass-through authentication like LDAP, where the applications have the opportunity to see your credentials on the way through to the authentication server. The common protocols used in SSO are SAML and, to a lesser (but increasing) degree, OIDC. The “social sign in with…” options often make sense for consumer applications (with careful consideration of which social provider to choose… Apple, Google and Microsoft are safer, more stable choices than Twitter or Facebook); however, Passkeys (a cryptographic, passwordless proof of identity) are far more portable and future-proof than any social sign-in option.
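To show what “referring to the IDP” looks like in the federated model, here is a minimal Python sketch of the first step of an OIDC authorization code flow: the application builds a URL that sends the user to the IDP to log in. The endpoint, client ID, and redirect URI are hypothetical placeholders; the query parameter names are the standard OIDC ones. A real integration should use a maintained OIDC library rather than this sketch.

```python
import secrets
from urllib.parse import urlencode


def build_authorization_url(authorization_endpoint: str, client_id: str,
                            redirect_uri: str) -> tuple[str, str, str]:
    """Return (url, state, nonce) for redirecting the user to the IDP."""
    state = secrets.token_urlsafe(16)  # ties the IDP's response to this request
    nonce = secrets.token_urlsafe(16)  # echoed in the ID token to detect replay
    params = {
        "response_type": "code",  # authorization code flow
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": "openid email",  # "openid" marks this as an OIDC request
        "state": state,
        "nonce": nonce,
    }
    return f"{authorization_endpoint}?{urlencode(params)}", state, nonce


# Hypothetical values, for illustration only.
url, state, nonce = build_authorization_url(
    "https://idp.example.com/authorize",
    "my-app",
    "https://app.example.com/callback",
)
```

Notice that the application never handles the user’s password: the user types it (or presents a passkey) at the IDP, and the application only receives a short-lived code back at its redirect URI.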

The SSO tax refers to the additional cost that organizations may incur when implementing SSO. The primary driver of this cost (and what many people take as the definition of SSO tax) is that many SaaS providers lock SSO behind higher cost tiers - making it more expensive and difficult for organizations to take advantage of this feature. This is a regressive tax - it most often impacts smaller to medium sized businesses - those that would have no need for the higher cost tiers besides the SSO functionality. The SSO Wall of Shame has a list of examples - ranging from a 15% increase to a mind boggling 6300%!

When SSO was a new requirement from enterprises to SaaS providers, some uptick might have been reasonable (but not 6300%) to cover some development costs and increases in support calls. However, SSO has been a requirement from large enterprises for many years now and it’s time for the costs to flip. A SaaS provider utilizing SSO reduces its overall cyber risk. It won’t directly be vulnerable to credential stuffing attacks, won’t have credentials to lose and won’t need to invest in a custom MFA solution. Conversely, creating financial incentives for customers to use SSO with their applications can shift the burden of defending against credential stuffing, risk management and MFA enforcement back on their customers.

In that world, the main cost associated with SSO becomes the need for additional infrastructure and resources. Organizations may need to purchase and maintain servers and other hardware to support the SSO system, which can add to the overall cost of the system. Additionally, SSO systems may require additional software and licensing fees, which can also add to the cost. These costs can be reduced by utilizing a commercial, hosted SSO provider. Even the lowest business tier Microsoft365 or Google Workspace accounts provide very capable SAML identity providers. For more specialized SSO solutions, Okta is a very popular option. Another example is Duo Security, who provide an SSO identity provider to make it easier to integrate their MFA solution.

Another cost associated with SSO is the need for specialized personnel to manage and maintain the system. Organizations may need to hire additional staff or contract with third-party vendors to manage and maintain the SSO system, which can add to the overall cost of the system.

Despite the SSO tax, many organizations choose to implement SSO systems because of the benefits they provide. SSO systems can help to improve security by reducing the number of login credentials that users need to remember and manage. Having a centralized identity provider is a solid foundation to build a Zero Trust environment from - one place to enforce multi factor (or move to a passwordless solution) as well as an opportunity to perform granular authorization of both users and devices. Additionally, SSO systems can help to improve productivity by allowing users to access multiple applications with a single set of login credentials.

In conclusion, the SSO tax refers to the additional cost that organizations may incur when implementing SSO systems. While the SSO tax can be significant, many organizations choose to implement SSO systems due to the benefits they provide, such as improved security and productivity. Procella strongly believes in SSO as a fundamental part of an organization’s security posture, but those organizations should be aware of the costs involved before starting on an SSO project, and push their SaaS providers to include standards-based single sign-on at base-level tiers. Procella calls for all SaaS providers to ditch their component of the SSO tax and lead from the front in freely supporting and encouraging enterprise SSO and consumer passwordless authentication methods.

Today is an exciting day for Procella as Akamai has announced that it will be adding Guardicore’s micro-segmentation technology to its growing Zero Trust product portfolio. We strongly believe that micro-segmentation is a key component of a comprehensive Zero Trust (ZT) strategy and are thrilled that Akamai (and Procella) customers will be able to leverage Guardicore’s microseg solution as part of a holistic ZT architecture.

We have known the Guardicore team for several years now and we are really looking forward to working with them as part of our expanded Akamai-based portfolio. Combining Micro-segmentation with Zero Trust Access, Enterprise Threat Protection (DNS firewall, Secure Web Gateway), Web Application Firewall and Multi-factor Authentication gives Akamai and Procella customers a comprehensive and compelling arsenal for combatting a constantly evolving threat landscape.

What is Micro-Segmentation?

We have known for years now that a “flat network” is an attacker’s dream. Being able to convert a single beachhead on a laptop or dev server into full layer2/3 access to everything in the environment, including the “crown jewels” or most sensitive data, makes defending against breaches, and their inevitable spread, almost impossible.

The traditional recommendation has always been to simply break up the network and put some firewalls or ACLs between segments. In this scenario, you end up with some high-trust zones, some low-trust zones, and a false sense of security that’s almost amusing in its naivete. At a (very) simplistic level, having one or more networks for your end users and a few more networks for your servers is a solid first step. Perhaps you then only allow the users to talk to well-defined applications on the servers and you (mostly) don’t allow the servers to initiate communication back to the users. Ok, sounds good. The next iteration might include taking a closer look at the servers supporting those crown-jewel applications. Nobody will argue with segmenting out the crown jewels, right? You also block access to them from random other servers in the environment (while continuing to allow access from all of user land). You keep adding firewalls and policies. The more segmented the network, the more protected you are against a major breach should you find a malicious actor in your network.

At least that’s the theory.

Sure, you’ve corralled your most precious assets together, which theoretically protects them from their less “trustworthy” brethren, but you’ve also made it incredibly convenient for an adversary to move laterally through your most trusted enclaves once they’ve established a foothold. Oops.

The problem is that, while application owners and users talk in terms of applications, the network and security teams (and their firewalls/VLAN ACLs) talk in terms of server IP addresses and port numbers. To compound this, there’s very little actionable discovery available in this type of environment. You’re left to take your best guess at what is required and then decide on the lesser of two evils: you can either break everything and deal with the understandably irate application owners and end users or you can hold your nose, allow everything else and log the traffic, all with the good intention of going back at some later date and “fixing” things. Oh, and those logs are once again IPs and port numbers, not applications.

In addition, in today’s world, applications are rarely isolated from other applications (for example, many applications may need to talk to Active Directory), so a minor change in one application environment can require updating firewall rulesets far away from that application. And across data centers. And clouds.

Yes, this all sounds like something that should be solvable with orchestration, but that still leaves the elephant in the room—all of the systems/applications/databases in the same broadcast domain have unfettered access to each other.

This is where agent-based micro-segmentation shows its strength. With an easy-to-deploy agent, Guardicore Centra allows you to quickly discover application traffic flows across your environment via an intuitive UI (along with a robust API). From there, you can quickly and easily segment your high-value assets, or quickly apply stringent lockdown policies to contain a ransomware outbreak. You identify your most critical protect surface(s) and go from there, taking the guesswork and legacy permit-and-log entries out of the equation. It’s a pragmatic approach to east/west traffic segmentation that complements traditional north/south firewalls while building in visibility and compliance.
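The difference between segment-level and workload-level policy can be sketched in a few lines. This is a generic illustration, not Guardicore Centra’s API or policy format: the `Workload` labels, the `ALLOWED` table, and both functions are hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    app: str
    role: str
    segment: str  # VLAN / broadcast domain the workload sits in


# Hypothetical allowlist: (source app, source role, dest app, dest role, port).
ALLOWED = {
    ("users", "laptop", "payroll", "web", 443),
    ("payroll", "web", "payroll", "db", 5432),
}


def bypasses_segment_firewall(src: Workload, dst: Workload) -> bool:
    """Traffic inside one segment never crosses the firewall between segments."""
    return src.segment == dst.segment


def label_allows(src: Workload, dst: Workload, port: int) -> bool:
    """Workload-level policy: default deny, allow only listed label pairs."""
    return (src.app, src.role, dst.app, dst.role, port) in ALLOWED


if __name__ == "__main__":
    payroll_web = Workload("pay-web-01", "payroll", "web", "crown-jewels")
    payroll_db = Workload("pay-db-01", "payroll", "db", "crown-jewels")
    hr_db = Workload("hr-db-01", "hr", "db", "crown-jewels")

    # Same segment: the traditional firewall never sees this traffic.
    print(bypasses_segment_firewall(payroll_web, hr_db))
    # Label policy: payroll web may reach its own database, not HR's.
    print(label_allows(payroll_web, payroll_db, 5432))
    print(label_allows(payroll_web, hr_db, 5432))
```

Because the policy keys on application and role rather than on IP addresses, moving a workload to another data center or cloud does not by itself require a rule change.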

Crystal Ball Gazing

Procella is passionate about Zero Trust. We are incredibly excited by the opportunity to combine Akamai’s existing portfolio with Guardicore’s advanced micro-segmentation solution to provide an even more extensive set of offerings to our customers. The future is now and the pressure is on Akamai to integrate this powerful segmentation technology and really show the power of a fully-integrated Zero Trust platform.

Forrester Research has a good breakdown of the executive order and what it means for federal agencies. They also include a warning about “a Laundry List Of Technologies With A Zero Trust Bumper Sticker” and “old ‘new’ vendors” who “represent the issues we should be running away from, not toward.”

These warnings don’t only apply to federal agencies. Your enterprise leadership and board may be making Zero Trust noises. Do you have a partner to separate the wheat from the chaff? Procella is standing by, ready to engage.

When the pandemic sent everyone home, many companies that had not previously allowed remote work were faced with a decision: enable remote access or shut down completely. Even companies with a restrictive remote work policy were backed into a corner and required to open it up to a wider range of employees and contractors.

As these same companies plan to open back up, they’re now faced with a new reality. What was previously thought of as impossible, difficult or unproductive has in fact carried their company through an entire year, and although most staff members will be eager to get back into the office in some manner, they also now hope for some form of remote work to remain available.

When you were first faced with enabling remote access last year, the advice you were hearing from your technology partners was probably to “set up a VPN.” Maybe your IT team had a small “break-glass” setup already in place that just needed to be scaled out. Or perhaps you had heard of Zero Trust network access (ZTNA) or Software Defined Networking (SDN), but you’d also heard those are journeys, not solutions. You needed a quick fix, so that’s what you chose… and your business survived, so that’s awesome news!

But (why is there always a “but” with awesome news?) …not so fast. One of the immediate side effects of this suddenly mobile global workforce was a sharp increase in attacks aimed specifically at remote workers, many of whom were unfamiliar with, and ill-prepared for, the risks inherent in working outside the perimeter of the corporate network. Bad actors, always looking for fresh targets, crafted new exploits and campaigns designed to take advantage of unsuspecting users and gain footholds in environments that had traditionally been out of reach, or at least more difficult to penetrate. Dwell time, the time between breach and detection, has dropped steadily over the last several years, but according to the 2020 Verizon Data Breach Investigations Report, roughly 25% of breaches still go undetected for months or more, so it’ll likely be quite a while before we understand the true impact of these attacks. In the unfortunate event of a breach, companies with a solid Zero Trust strategy will at least be able to minimize the collateral damage.

Regardless of where you are with your remote workforce, it’s never too late to start planning. If employees are more engaged and are enjoying the benefits of an improved work-life balance, chances are that they’re more productive. And the longer employees have the flexibility to work remotely, the more difficult it will be to put the proverbial genie back in the bottle, even if you wanted to. In most cases, the question shouldn’t be whether you continue to offer remote access but how you do it in the most secure manner possible. Is VPN, a decades-old technology that essentially merges employees’ home networks with your enterprise network, really the right long-term answer? (Hint: It’s not.)

VPN is a product of castle-and-moat thinking, which doesn’t reflect the current norms of clouds, social networks and the consumerization of IT. Do all of your staff need the same level of access to all your systems as your IT administrators? While that question is obviously rhetorical, let’s get serious: if your network was not designed to be accessed remotely, there are almost certainly assumptions baked into the (lack of) security models around your applications. For that matter, even if it was designed with remote access in mind, was it designed for remote access in 2021?

That’s one of the areas where a Zero Trust mindset comes in. Never trust, always verify. Protect your critical data with:

- Strong authentication controls to ensure that the user is a legitimate staff member.

- Strong device posture controls to ensure that the device is a company laptop (or, if you allow BYOD, that it’s a well-maintained laptop).

- Strong authorization controls to ensure that the staff member is authorized to access the application they’re trying to reach, from the device they’re using, at the time they’re online, and from where they’re located.
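The three controls above can be read as one per-request decision. The following is a minimal sketch, assuming a hypothetical `AccessRequest` shape and made-up policy tables (`APP_ENTITLEMENTS`, `ALLOWED_COUNTRIES`, `WORK_HOURS`, `ALLOW_BYOD`); a real ZTNA product would take these signals from an identity provider and a device management service rather than from constants.

```python
from dataclasses import dataclass, replace
from datetime import time
from typing import Tuple


@dataclass(frozen=True)
class AccessRequest:
    user: str
    mfa_passed: bool      # result of strong authentication
    device_managed: bool  # company-issued laptop?
    device_patched: bool  # well-maintained (relevant for BYOD too)
    app: str
    local_time: time
    country: str


# Hypothetical policy data, for illustration only.
APP_ENTITLEMENTS = {"alice": {"payroll"}, "bob": {"wiki"}}
ALLOWED_COUNTRIES = {"US", "CA"}
WORK_HOURS = (time(6, 0), time(22, 0))
ALLOW_BYOD = False


def decide(req: AccessRequest) -> Tuple[bool, str]:
    """Never trust, always verify: every check must pass on every request."""
    if not req.mfa_passed:
        return False, "authentication"
    if not req.device_patched or not (req.device_managed or ALLOW_BYOD):
        return False, "device posture"
    if req.app not in APP_ENTITLEMENTS.get(req.user, set()):
        return False, "not authorized for application"
    if not (WORK_HOURS[0] <= req.local_time <= WORK_HOURS[1]):
        return False, "outside allowed hours"
    if req.country not in ALLOWED_COUNTRIES:
        return False, "location"
    return True, "ok"


if __name__ == "__main__":
    request = AccessRequest("alice", True, True, True, "payroll",
                            time(9, 30), "US")
    print(decide(request))
    # Same user, same application, but from a personal device.
    print(decide(replace(request, device_managed=False)))
```

Note the contrast with a VPN: the decision is made per application and per request, not once at the network edge.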

ZTNA is also a foundational element of the Secure Access Service Edge (SASE). We’ll cover SASE in more detail in a future blog entry, but, at a high level, SASE is the marrying of networking and security functions in a cloud-native platform. Regardless of where your applications live, the sooner that you embrace SASE, the better, and there’s no better place to start than with ZTNA.

Partnering with the experts at Procella will allow you to develop a comprehensive roadmap to an agile workforce without compromising the safety and security of your company’s most important digital assets.

Coming from the trenches of Zero Trust deployments, the founders of Procella believe Zero Trust concepts can:

- Reduce the scope and impact of the inevitable breaches

- Improve user experience and help users make the safe choice

- Safely enable more agile workforces and workflows

We also know that Zero Trust is a journey, not as simple as just installing a product, despite what the vendor marketing might imply. We’ve taken this journey. Let us partner with you to help you avoid the dead ends, diversions and wrong turns.