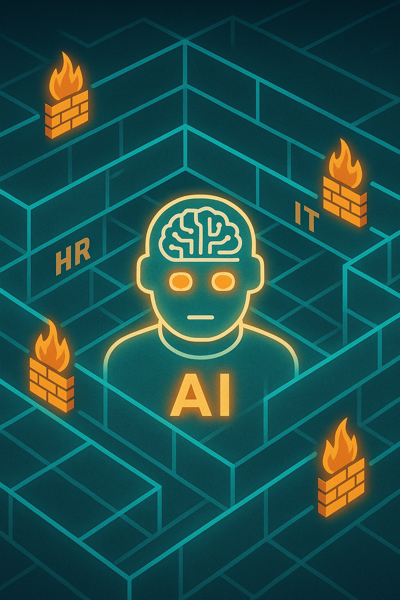

The evolution of Generative AI and Large Language Models (LLMs) into Agentic AI has boosted productivity across many industries. However, it also introduces new risks for enterprises. These include unauthorized Model Context Protocol (MCP) servers, a growing number of non-human identities (NHIs), and shared credentials that let agents impersonate humans.

Agentic AI tools follow the broader trend of the "consumerization of IT." They offer a quick, easy, and relatively inexpensive way to connect agents to tools and data without involving formal IT processes. These tools are often expensed rather than purchased through the formal finance process, so IT may never learn they exist.

A critical distinction often overlooked in the rush toward agent adoption is the difference between consumer and enterprise expectations around data use. Consumer AI tools are typically built on a model in which user interactions may be used, directly or indirectly, to improve the vendor's shared models. Enterprises, in contrast, require strict guarantees that their data will not be used for model training, will remain isolated from other tenants, and will be handled in accordance with regulatory, contractual, and security commitments. When employees adopt consumer-grade AI services to "get things done," they may unknowingly allow sensitive internal data to enter training pipelines that lie far outside enterprise controls.

AI's current public visibility and marketability present significant challenges for IT and security departments. It may be tempting to cover your ears and ignore these issues, but the fundamental principles still apply:

- Robust Inventory: Maintain a comprehensive, up-to-date view of all your servers, the software running on them, and your end-user devices.

- Robust Identity: Implement tools and processes to identify both human and non-human identities, along with their roles and access permissions. Wherever possible, replace passwords with cryptographically secure authentication.

- Robust Access: Limit both humans and AI agents to only the systems they actually need.

To mitigate these risks, IT and security departments should invest in areas such as identity management, Zero Trust Network Access, microsegmentation, and inventory management. With Procella's groundbreaking approach to cybersecurity assessments, we can help you quantify, prioritize, and justify new spending that makes the most of your existing systems, tools, and people.